Streaming ffmpeg with Node.js over web sockets to HTML

Over the last few months, Sergi Lario and I had some fun implementing a GUI to control a media server built by playmodes. We used web technologies and needed to stream the content of the media server to our app. This article inspired us with an easy way to stream video from ffmpeg to an HTML5 canvas.

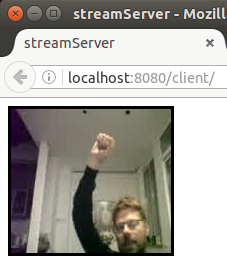

In short, ffmpeg reads the incoming stream and writes it to Node.js as Motion JPEG, that is, as a sequence of individual JPEG images. Node.js encodes the images as Base64 and sends them over web sockets to the frontend, which draws them into the HTML page.
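The sketch below illustrates the Base64 step. It turns one JPEG frame held in a Node.js Buffer into the kind of data URL the frontend code loads. The helper names jpegToDataUrl and base64Length are hypothetical, and only Node's built-in Buffer is used.

```javascript
// Hypothetical helper: turns one JPEG frame (a Node.js Buffer) into a data URL
// that an <img> element, a CSS background, or a canvas Image can load.
function jpegToDataUrl(frame) {
  return "data:image/jpeg;base64," + frame.toString("base64");
}

// Base64 encodes every 3 input bytes as 4 characters (padding the last group),
// so the text sent per frame is about a third larger than the JPEG itself.
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3);
}

// A minimal "JPEG" consisting only of the start (FF D8) and end (FF D9) markers:
console.log(jpegToDataUrl(Buffer.from([0xff, 0xd8, 0xff, 0xd9])));
// prints "data:image/jpeg;base64,/9j/2Q=="
console.log(base64Length(30000)); // prints 40000
```

With this approach, a 30,000-byte frame becomes 40,000 characters on the wire. That overhead is one cost of sending Base64 text instead of raw bytes.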

Here is a short description of the essential parts:

Node.js starts ffmpeg as a child process, which reads the UDP stream and writes it to its standard output (pipe:1) as Motion JPEG:

```javascript
var ffmpeg = require('child_process').spawn("ffmpeg", [
  "-re",
  "-y",
  "-i",
  "udp://" + this.streamIP + ":" + this.streamPort,
  "-preset",
  "ultrafast",
  "-f",
  "mjpeg",
  "pipe:1"
]);
```

This code works on Linux (Ubuntu 14.04 with ffmpeg 2.6), but I couldn't get it to run on Windows and would really appreciate any tips for that.
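The sketch below wraps the same spawn call in functions. It assumes the UDP address is passed in as arguments rather than read from this. The names buildFfmpegArgs and startFfmpeg and the options object are hypothetical.

The sketch drops -preset ultrafast, which as far as I know is an x264 encoder option that the MJPEG encoder does not use. In its place it exposes two optional settings:
- -q:v, the MJPEG quality.
- ffmpeg's own unsharp video filter.

It also forwards ffmpeg's log output (stderr) and listens for the 'error' event. A missing or misplaced ffmpeg binary shows up there (for example as ENOENT), which may help when debugging the Windows case.

```javascript
const { spawn } = require("child_process");

// Hypothetical helper: builds an ffmpeg argument list that reads a UDP
// stream and writes Motion JPEG to stdout. Options (all assumptions):
//   quality: MJPEG quantizer passed as -q:v (2 = best, 31 = worst)
//   sharpen: if true, applies ffmpeg's unsharp filter (5x5 luma matrix, amount 1.0)
function buildFfmpegArgs(ip, port, options = {}) {
  const args = ["-re", "-y", "-i", `udp://${ip}:${port}`];
  if (options.sharpen) {
    args.push("-vf", "unsharp=5:5:1.0");
  }
  if (options.quality !== undefined) {
    args.push("-q:v", String(options.quality));
  }
  args.push("-f", "mjpeg", "pipe:1");
  return args;
}

// Starts ffmpeg with those arguments. ffmpeg writes its log to stderr, and a
// binary that cannot be found is reported through the 'error' event.
function startFfmpeg(ip, port, options) {
  const proc = spawn("ffmpeg", buildFfmpegArgs(ip, port, options));
  proc.stderr.on("data", (chunk) => process.stderr.write(chunk));
  proc.on("error", (err) => console.error("failed to start ffmpeg:", err.message));
  proc.on("exit", (code) => console.error("ffmpeg exited with code", code));
  return proc;
}

console.log(buildFfmpegArgs("127.0.0.1", 1234).join(" "));
// prints "-re -y -i udp://127.0.0.1:1234 -f mjpeg pipe:1"
```

In the server, startFfmpeg(streamIP, streamPort, { quality: 5 }) would take the place of the inline spawn call. The returned process exposes the same stdout stream as before.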

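The data is read from ffmpeg's stdout and sent over web sockets (socket.io) to the frontend. Each 'data' event delivers whatever chunk the pipe currently holds, and that chunk is not guaranteed to be exactly one complete JPEG frame. At this step it would be nice to sharpen the images using ImageMagick, but I didn't have success with that part: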

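```javascript
ffmpeg.stdout.on('data', function (data) {
  // data is already a Buffer, so it can be encoded directly
  // (the Buffer constructor used previously is deprecated)
  var frame = data.toString('base64');
  io.sockets.emit('canvas', frame);
});
```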
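Chunk boundaries from stdout do not line up with frame boundaries, so a partial or merged JPEG can reach the browser. That is one plausible cause of broken or flickering frames. A common heuristic is to buffer the bytes and cut frames at the JPEG start marker (FF D8) and end marker (FF D9).

The sketch below does this. createMjpegSplitter is a hypothetical name. The marker scan assumes plain JPEGs like the ones ffmpeg writes with -f mjpeg, without embedded thumbnails that would carry their own markers.

```javascript
// Hypothetical helper: reassembles complete JPEG frames from the arbitrary
// chunks emitted by ffmpeg's stdout. Each JPEG starts with the SOI marker
// (FF D8) and ends with the EOI marker (FF D9); this simple scan assumes
// there are no embedded thumbnails, which would contain their own markers.
const SOI = Buffer.from([0xff, 0xd8]);
const EOI = Buffer.from([0xff, 0xd9]);

function createMjpegSplitter(onFrame) {
  let pending = Buffer.alloc(0);

  return function push(chunk) {
    pending = Buffer.concat([pending, chunk]);

    for (;;) {
      const start = pending.indexOf(SOI);
      if (start === -1) {
        // No frame start yet; keep a trailing 0xFF in case it is the first
        // half of an SOI marker split across two chunks.
        pending = pending.length > 0 && pending[pending.length - 1] === 0xff
          ? pending.subarray(pending.length - 1)
          : Buffer.alloc(0);
        return;
      }
      const end = pending.indexOf(EOI, start + 2);
      if (end === -1) {
        // Frame not complete yet; drop bytes before its start and wait.
        pending = pending.subarray(start);
        return;
      }
      onFrame(pending.subarray(start, end + 2));
      pending = pending.subarray(end + 2);
    }
  };
}

// Possible wiring with the server code above (ffmpeg and io as defined there):
// ffmpeg.stdout.on('data', createMjpegSplitter(function (frame) {
//   io.sockets.emit('canvas', frame.toString('base64'));
// }));
```

With this wiring, every socket.io message carries exactly one complete JPEG. The client code can then stay unchanged.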

Finally, the data is received on the frontend and drawn as the CSS background of an HTML element:

```javascript
this.socket.on('canvas', function(data) {
  try {
    $('#videostream').css('background', 'transparent url(data:image/jpeg;base64,' + data + ') top left / 100% 100% no-repeat');
  } catch(e) { }
});
```

As other users have also reported, the CSS background method seems a little choppy and inconsistent. It seems more promising to paint the stream directly into the canvas:

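```javascript
this.socket.on('canvas', function(data) {
  try {
    // #videostream must be a <canvas> element for this variant
    var canvas = document.getElementById('videostream');
    var context = canvas.getContext('2d');
    var imageObj = new Image();
    imageObj.onload = function() {
      // Width and height belong to the canvas element, not to the 2D context.
      // Resizing clears the canvas, so only do it when the size changes.
      if (canvas.width !== imageObj.width) canvas.width = imageObj.width;
      if (canvas.height !== imageObj.height) canvas.height = imageObj.height;
      context.drawImage(imageObj, 0, 0, canvas.width, canvas.height);
    };
    imageObj.src = "data:image/jpeg;base64," + data;
  } catch(e) { }
});
```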
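One possible way to reduce choppiness is to avoid queueing frames on the client. This is an untested assumption, not part of the original program. While one image is still decoding, the client keeps only the newest incoming frame and drops the ones in between.

The sketch passes decoding and drawing in as a callback (decodeAndDraw, hypothetical), so the scheduling logic can be tried outside a browser. The commented lines at the bottom show how it might be wired to the canvas code above.

```javascript
// Hypothetical helper: keeps at most one frame in flight on the client.
// While a frame is being decoded and drawn, newer frames overwrite a single
// pending slot, so older frames are dropped instead of queueing up.
function createLatestFrameRenderer(decodeAndDraw) {
  let busy = false;
  let pending = null;

  function render(data) {
    busy = true;
    decodeAndDraw(data, function done() {
      if (pending !== null) {
        const next = pending;
        pending = null;
        render(next);
      } else {
        busy = false;
      }
    });
  }

  return function onFrame(data) {
    if (busy) {
      pending = data; // replaces any older frame that was still waiting
    } else {
      render(data);
    }
  };
}

// Possible browser wiring (assumes a <canvas id="videostream"> element):
// var canvas = document.getElementById('videostream');
// var context = canvas.getContext('2d');
// function decodeAndDraw(data, done) {
//   var img = new Image();
//   img.onload = function () {
//     if (canvas.width !== img.width) canvas.width = img.width;
//     if (canvas.height !== img.height) canvas.height = img.height;
//     context.drawImage(img, 0, 0);
//     done();
//   };
//   img.onerror = done;
//   img.src = 'data:image/jpeg;base64,' + data;
// }
// this.socket.on('canvas', createLatestFrameRenderer(decodeAndDraw));
```

Dropping intermediate frames trades completeness for latency. The picture always shows the most recent frame the browser has managed to decode.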

How to

You can find the test program on GitHub. These are the steps to reproduce the example described above:

1. Set up ffmpeg and create a test stream, for example from a webcam:

   ```shell
   ffmpeg -f video4linux2 -i /dev/video0 -f h264 -vcodec libx264 -r 10 -s 160x144 -g 0 -b 800000 udp://127.0.0.1:1234
   ```

2. Adjust the directory, server IP, and port to your setup, then run the Node server program from the server directory:

   ```shell
   node streamserver.js
   ```

3. Open a browser and go to your IP and port, for example http://localhost:8080/client/. You should see your video stream embedded in the website.
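Step 2 asks you to edit the IP and ports by hand. An alternative is to read them from environment variables. The sketch below is a minimal version of that idea, assuming hypothetical variable names STREAM_IP, STREAM_PORT and HTTP_PORT, which are not part of the original program. Its defaults match the values used in the steps above.

```javascript
// Hypothetical configuration loader: reads the stream address and HTTP port
// from an environment-like object, falling back to the values used in the
// how-to (udp://127.0.0.1:1234 and http://localhost:8080).
function loadConfig(env) {
  // Accepts only integers in the valid TCP/UDP port range.
  function port(value, fallback) {
    const n = Number.parseInt(value, 10);
    return Number.isInteger(n) && n > 0 && n < 65536 ? n : fallback;
  }
  return {
    streamIP: env.STREAM_IP || "127.0.0.1",
    streamPort: port(env.STREAM_PORT, 1234),
    httpPort: port(env.HTTP_PORT, 8080),
  };
}

// In the server: const config = loadConfig(process.env);
console.log(loadConfig({ STREAM_PORT: "5000" }));
// prints { streamIP: '127.0.0.1', streamPort: 5000, httpPort: 8080 }
```

Invalid or missing values fall back to the defaults. A typo in a port number therefore does not stop the server from starting.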

Tags: canvas, ffmpeg, HTML5, javascript, nodejs, socket.io, stream, video

June 24th, 2015 at 2:48 pm

Hi there. Firstly, thank you for the code above. I have got it working, but I have an issue and wondered if you could help.

I am executing an ffmpeg command as above, but when it is updating the canvas background on the client side, the video is choppy and inconsistent.

I am not using a webcam / live video.

My end goal is to run a filter_complex in ffmpeg, creating a new video with inserted images, and streaming that to the client as it’s being built.

Do you know of any tips / examples that could help with this please?

Many thanks,

Dave.

November 24th, 2015 at 2:36 am

Did you figure out how to make the video smooth? We are streaming live video via web sockets and it is also jumpy and inconsistent.

April 12th, 2016 at 9:48 am

It seems better to draw the stream directly into the canvas instead of using a CSS background image. To do so, uncomment lines 33 to 41 and comment out line 43.